Techstorm AI Forecast, October 2025: The Hype Intensifies!

Image: Depositphotos

This Techstorm Forecast has been a long time in the making. With all the daily AI noise hitting us from all directions, the main challenge was knowing when to put my foot down and decide that this is the snapshot I want to capture. Well, the time is finally here, and as you might suspect, this Techstorm AI Forecast focuses mainly on one question: are we in a bubble or not? (1)

The short answers? Yes, Probably, Definitely, and No, in that order. Let me explain in four parts below …

In my first, somewhat ironic, AI Forecast more than two years ago, I already used the subheading “Hype Included”.

Having been a tech bubble collector for more than 25 years, I noticed that by 2023 the signs of a bubble were already hidden in plain sight. Fast forward to October 2025. The bubble is now fueled by VC exuberance (FOMO), a stock market frenzy, exaggerated user expectations, copyright infringements, the explosion of vibe coding, and AI startups competing for world domination, and I believe it will soon burst (or at least deflate).

If you don’t believe me, trust the expert(s). Scientists, billionaires, economists, Nobel laureates, opinion writers, and many other people smarter than me have debated this over the last several months.

People claiming there’s no bubble have to work hard to explain why some of the excesses of this hype are being passed off as “standard AI business practice”. This all makes me think of what investor legend Sir John Templeton said in August 2000:

“The four most expensive words in the English language are ‘This time it’s different’.”

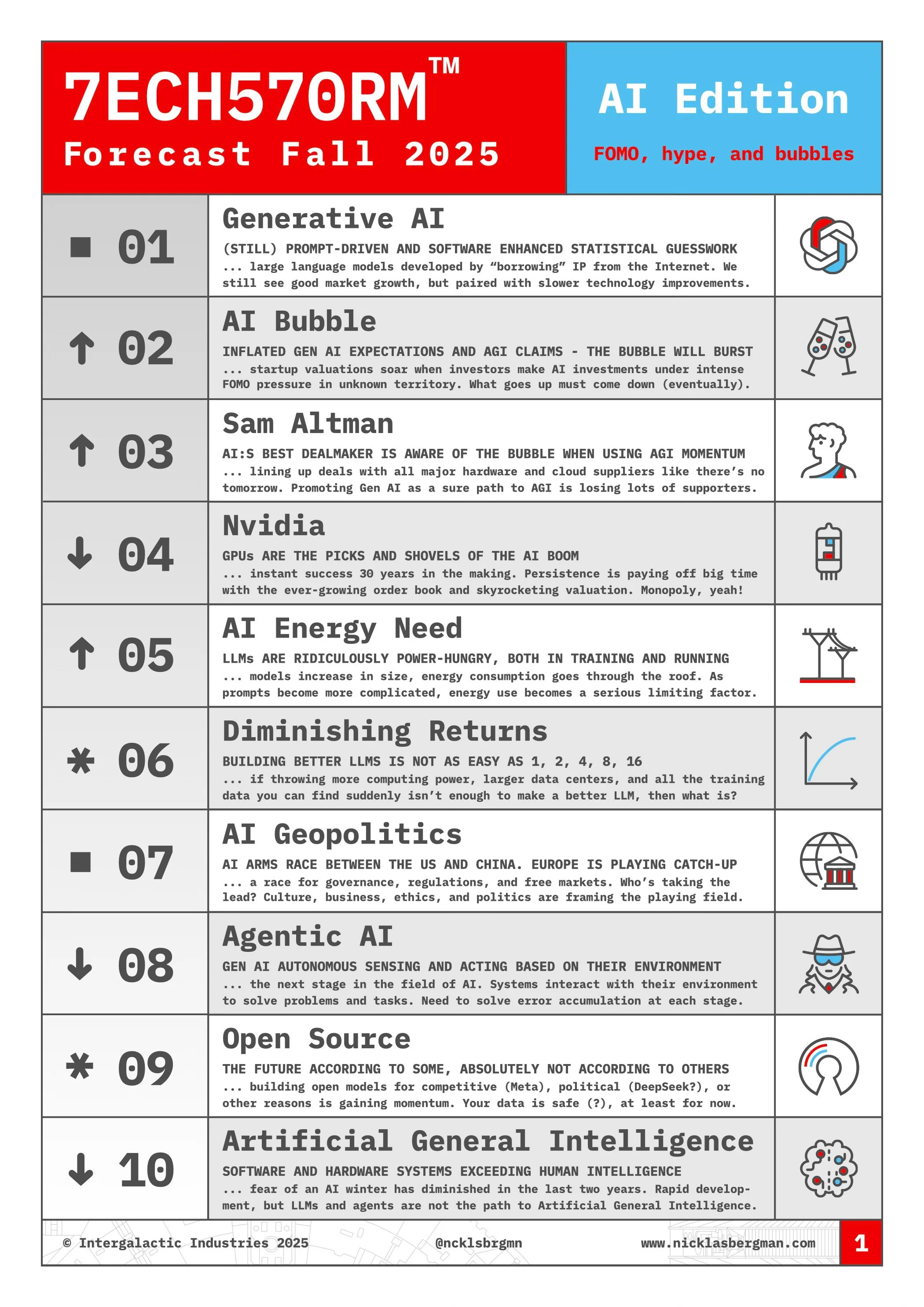

Time for the Top 10 List

For those of you who are visual thinkers (like me) and/or suffer from a short attention span (like me), and/or are rushing between meetings (not me), here’s the Techstorm Forecast Top 10 list. If anything sparks your curiosity, scroll down and join me in going down the AI bubble rabbit hole.

1. Is There a Private Market Bubble?

First, the private market perspective, also known as the startup world. What we’re seeing now is very similar to the dotcom bubble of the late 1990s: companies with impressive growth and claims of being close to Artificial General Intelligence (AGI). Investors are equally amazed, but their enthusiasm is partly fueled by FOMO and by the fact that their coffers are full. They need to find companies to invest in to justify their existence (and collect management fees).

In technology investing, two questions need to be answered in times of disruption. First, how much value will this innovation create? Second, who will capture that value? What puzzles me is that the Generative AI ecosystem still isn’t mature enough to answer either of these questions. It’s unclear whether this is the right path to AGI (where the treasure at the end of the rainbow allegedly lies). It’s equally unclear where in the value chain the winners will be. Yes, we’re seeing huge profits among hardware suppliers, but there’s a lot of uncertainty about where else winners will be found.

What seemed to be a winner-takes-all scenario for LLM developers might, in the near future, turn into a commodity race. Despite the billions spent on product development, LLMs are on their way to becoming low-margin utilities with little or no differentiation unless you’re a power user. Other utilities, such as railroads, telephone networks, and fiber connectivity, have historically struggled to reach sustainable profit margins once the dust (hype) settled.

OpenAI is still the poster child of the Generative AI industry. Sam Altman, its CEO, is working hard to sell Generative AI to the world with the (as yet unproven) claim that it’s the path to AGI. The latest valuation rumors suggest that at least some VCs believe him. OpenAI is one of the fastest-growing companies ever. Yet, since it’s losing money on every user query, a stable, sustainable business model is not within reach at the moment.

It’s also clear that OpenAI is losing market share, even though it is in a rapidly growing market. Interestingly, though, it’s mainly losing to Google, meaning that distribution is still an essential part of the equation.

Bubble? Yes.

Repercussions? Mostly limited to entrepreneurs swinging for the fences and VCs investing at high valuations or in the wrong part of the value chain.

2. Are the Public Markets Overvalued?

There are some (relatively) strong arguments that this time actually is different from the dotcom era 25 years ago. The main difference is that tech companies today are growing their revenue while (mostly) being very profitable. That said, if the following six-month share-price gains for AI picks-and-shovels companies aren’t signs of a bubble, then I don’t know what is:

AMD (AMD): +179%

ARM (ARM): +68%

Broadcom (AVGO): +102%

CoreWeave (CRWV): +253%

Nvidia (NVDA): +87%

Oracle (ORCL): +125%

One factor to consider, of course, is that startups today remain private far longer than before, which is why we don’t see unprofitable listed companies as part of this AI boom. They can stay private by taking advantage of a growing cohort of late-stage private investors, large, risk-willing credit markets, and creative funding from partners and hardware suppliers with strong cash flows. In the last couple of months, OpenAI has signed deals with data center suppliers worth up to 1 trillion USD, despite neither having the money to pay for them nor being anywhere close to making a profit.

These two quotes describe the current hype in an unusually blunt way:

Jim Chanos, famous short seller, about the AMD deal: “Don’t you think it’s a bit odd that when the narrative is ‘demand for compute is infinite,’ the sellers keep subsidizing the buyers?”

Gary “The Generative AI emperor is naked” Marcus stated: “Oracle’s new market cap, near a trillion dollars, up nearly 50% this week, driven largely by this one apparently non-binding deal with a party that doesn’t have the money to pay for the services, seems more bonkers than most.”

And on top of all this, recent news that AI capital expenditure (capex) now contributes more to US GDP growth than consumer spending makes it clear to everyone (?) that this isn’t sustainable. With hundreds of billions of dollars being invested in building this AI infrastructure, the question is when (and if) these companies will see meaningful returns on their huge investments.

This uncertainty is masked by the fact that the MAG7 (the “Magnificent Seven” tech giants) are cash machines and can invest as if there’s no tomorrow, at least for the moment. Adequate returns on these investments depend entirely on whether Generative AI is the holy grail of AI, or whether other, more scalable AI technologies will emerge and eventually overtake the LLM approach.

This raises the question of whether these massive investments in computing power will find other uses or become sunk costs. The railway tracks from the 19th-century boom remained useful long after that bubble burst. The challenge with this potential bubble is that the GPUs powering the data centers have a lifespan of only a couple of years.

Bubble? Probably.

Repercussions? Paper fortunes will be wiped out if and when the bubble bursts, with the risk of a shockwave through the financial system.

3. Is Generative AI Overpromising?

As we approach year four of the Generative AI explosion, more and more people are raising questions about LLM scaling challenges. Not only do these systems require vast amounts of energy (both for training and for running them), but throwing more computing power and data at LLM development no longer seems to yield vastly improved models.

The key question is whether Gen AI is a technological dead end or the path to AGI. So far, the evidence does not point toward AGI; on the contrary, we see emerging signs of diminishing returns when scaling LLMs. One recent example is the underwhelming launch of OpenAI’s latest model, GPT-5.

Additionally, new entrants are challenging the large US LLM developers, and companies like China’s DeepSeek are gaining attention with their vastly cheaper approach to LLM training and scaling. Also, Alibaba published a paper last week that presented a solution to reduce the number of Nvidia GPUs needed to serve its AI models by 82%. Or, perhaps the solution to diminishing returns can be found outside the usual suspects? Maybe the solution lies in novel compression algorithms that enable running local LLMs on smartphones?

There’s also a growing trend toward developing specialized models that cater to a specific niche or use case, as seen in the latest OpenAI example in biotech research.

These examples make it clear that Generative AI is only the first step in the development of artificial intelligence, and that the bubble is selective. LLM developers overpromise and underdeliver, while painting a vision of near-future AGI being within reach. Most of their impressive valuations are based on these claims and on a future winner-takes-all AI market. I have yet to see any evidence of this, or even a description of how Gen AI and LLMs can scale and learn faster, better, and cheaper, all of which is needed to reach AGI from the current Gen AI stack.

Bubble? Definitely!

Repercussions? The risk of investing too much time, effort, and money in the wrong direction.

4. Are There Any Good AI Use Cases?

Generative AI is generating tremendous hype and exuberance, but also use cases that show both promise and actual return on investment. Your social feeds are filled with self-proclaimed experts offering tips on how to improve performance, sales, due diligence, and whatnot. There’s a constant stream of AI webinars on how to implement AI strategies in your industry, all while CEOs are still struggling to get the digitalization efforts of the last decade to actually work.

On the sobering side, I see clear signs that many are starting to realize Generative AI is not the holy grail it’s been promised to be. Instead, people see the limitations, reset expectations, and find practical applications based on what’s currently possible with the latest LLMs.

Having a Generative AI take notes in your client meetings makes perfect sense. Using a built-in LLM, trained on your data, to handle customer queries will free up time for your sales and support staff. Including an AI perspective early in your drug development process is a no-brainer, as it helps you save time and money by avoiding going down the wrong path. And maybe the best (or worst) example of human ingenuity: Having an AI fake expense reports, including receipts and all.

Just beware of “workslop”, the result of lazy people using Generative AI and leaving their co-workers to clean up the mess. In other words, using AI to create something that looks good at first glance but, on closer inspection, is more or less useless.

Used the wrong way, AI coding assistants are good (bad) examples of this. Vibe coding companies like Cursor and Lovable are among the fastest-growing startups ever, with valuations in the billions just a year after launch. I have tried several of these solutions, and while it’s easy to produce mock-ups and (very) basic apps, I always run into problems sooner or later when bugs appear. The challenge is debugging something you didn’t create yourself: it takes a lot of effort to understand the code and identify the causes of crashes. Recent statistics show that I’m not alone here. Time will tell whether vibe coding can overcome this hurdle, reach the masses, and become a competitive way to build software.

Despite the challenges above, the use of basic AI tools will be essential (if not yet, then soon) for all incumbents who want to stay competitive. Already, many large investors place future AI-related risks at the top of their evaluation of investment opportunities. Ignoring this will most likely limit your competitive advantage and destroy significant value down the road.

Bubble? No.

Repercussions? Yes, but only if you ignore it.

In these strange times, we see enormous paper fortunes being created, and I’m sure we’ll see enormous (and real) losses from Generative AI investments in the (not so distant) future. As with all bubbles, the question of WHEN it will burst is essential and hard to answer.

Despite the obvious signs of a bubble and the fact that Generative AI has a scaling problem, don’t ignore this. Don’t look the other way and think it will pass. Embrace these new tools, put them to the test, and make them work for you. Be curious and a bit skeptical, an approach that works for most emerging technologies.

Regardless of whether the challenge of scaling Generative AI models is solved or a completely different AI technology emerges, you will be prepared for what’s to come.

Embrace uncertainty!

(1) This article was originally posted by Nicklas Bergman on his Substack (ncklsbrgmn@substack.com) on October 29, 2025.

Nicklas Bergman


Nicklas is a proud founder, curious funder, applied futurist. Epic failures and a couple of home runs. Deep tech angel + EIC Fund IC. Technology interpreter and rabbit hole explorer. Tech bubble collector. Can’t wait to go skiing.