The Adaptive Enterprise: Why AI at Scale Requires a New Organizational Metabolism


Over the past eighteen months, corporate language has shifted from curiosity about AI to impatience with results. Adoption is widespread. Enterprise impact is not.

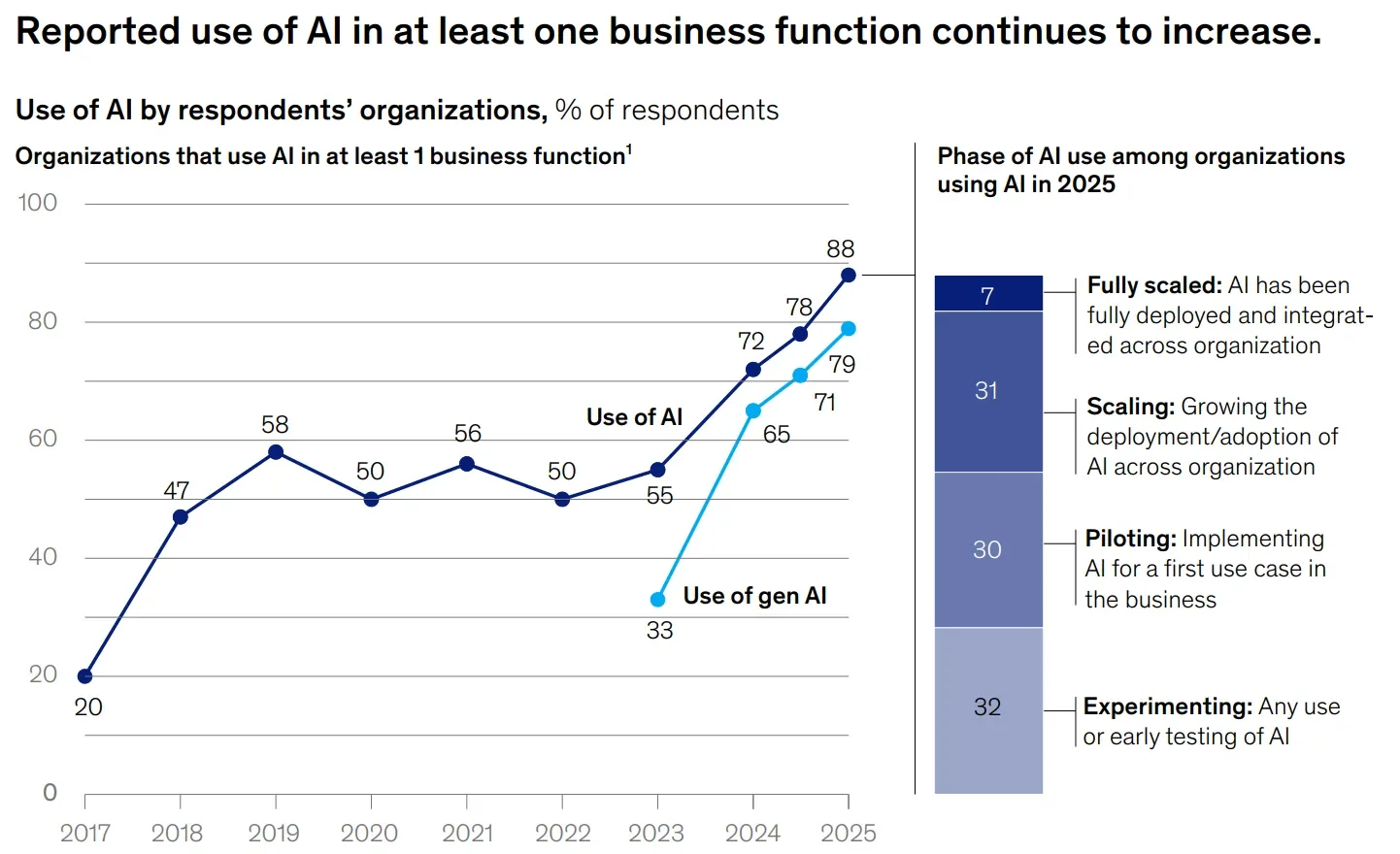

McKinsey’s State of AI 2025 found that about 88% of companies now use AI in at least one function and 62% are experimenting with AI agents. Only around 23% report scaling an agentic system somewhere, with single-function scaling rarely breaking into double digits. Only 39% report enterprise-level EBIT lift.

Figure 1. Adoption without absorption: 88% of organizations now use AI, 62% are experimenting with AI agents, yet only around 23% report scaling an agentic system anywhere in the enterprise. (Bar/line chart showing adoption vs. scaling gap.) Source: McKinsey & Company, “The State of AI in 2025: Agents, Innovation, and Transformation.”

The same signal shows up in recent market disclosures. For example, Salesforce reports more than 5,000 Agentforce deals since late 2024, 3,000 of them paid, and a 119% rise in agent creation within six months. Snowflake logged more than 15,000 enterprise agents deployed by over 1,000 customers in three months.

Momentum is real. The wiring is the bottleneck.

The metabolism problem

Most organizations now generate intelligence. Very few can move it through the enterprise with speed, integrity, and context. Pilots bloom in isolated functions. They struggle at the handoffs where policies, processes, and people intersect. MIT’s State of AI in Business 2025 estimated that 95% of enterprise AI pilots fail to drive rapid revenue acceleration. BCG’s 2025 research identified only 5% of companies as true leaders in value capture, closely mirroring McKinsey’s narrow slice of high performers.

Intelligence is in the bloodstream; the circulatory system is underdeveloped. Data rarely arrives when decisions are made. Policy is not expressed where work happens. Feedback from outcomes doesn’t return to improve the next case. The result is activity without compounding value. You can recognize a low metabolic rate when intelligence pools inside tools and teams, handoffs strip context and slow cycle time, and there is no reliable path from outcome to learning.

Raising the metabolic rate requires re-engineering how intelligence circulates through the enterprise, not adding more tools. It demands a different operating fabric, one that connects systems of record to systems of intelligence capable of reasoning, learning, and enforcing trust in motion. Without that connective tissue, even the best AI deployments remain islands of potential rather than catalysts for compound advantage.

From automation to adaptation

In earlier essays, I argued that the real revolution is not about tools. It is about teams, trust, and transformation. That is why agentic AI matters. It turns software from a set of tasks into a set of teammates that can plan, coordinate, and improve with feedback. The next phase is not more autonomy in isolation. It is adaptation across the business. An adaptive enterprise turns every decision, every workflow, and every outcome into new intelligence that can be reused.

That ambition changes the questions leaders ask. Instead of “Which model?” and “Which use case?” the more durable questions are “How does intelligence travel?” and “How quickly do we convert learning into better work?”

From systems of record to systems of intelligence

For three decades, systems of record optimized storage, access control, and periodic reporting. That scaffolding does not scale AI. Senior leaders need an architecture that treats intelligence as a first-class capability, not as a bolt-on to a database.

A system of intelligence sits on governed data and policy, learns from live context, and acts inside the workflow with human oversight. It changes what we fund, how we ship, and what we measure.

Data as products, not projects. Curate canonical, reusable data products with contracts, lineage, SLAs, and owners so teams build on stable foundations and stop re-plumbing pipelines.

Reasoning as shared services. Standardize retrieval, grounding, summarization, and decision support as platform services callable by any workflow so learning spreads instead of staying trapped in teams.

Policy in the path of work. Express rules as code and bind them to identity and context so decisions are consistent, auditable, and explainable at the moment of action.

Memory with provenance. Preserve evidence behind every decision so models and people learn from outcomes without guesswork.

Operating cadence. Shift from projects to products. Ship frequently. Retire brittle logic as shared services replace it.
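As one illustration of "policy in the path of work" and "memory with provenance," the sketch below expresses two rules as code, binds them to an acting identity and its context, and returns a decision that carries its own evidence. It is a minimal sketch under stated assumptions, not a reference design: the `Actor`, `Rule`, and `Decision` types, the role names, and the 1,000 approval limit are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class Actor:
    """Who or what is acting (hypothetical identity model)."""
    actor_id: str
    role: str


@dataclass(frozen=True)
class Rule:
    """One policy rule: a name, a human-readable explanation, and a predicate."""
    name: str
    explanation: str
    check: Callable[[Actor, dict], bool]


@dataclass
class Decision:
    """The outcome plus the evidence needed to explain it later."""
    allowed: bool
    failed_rules: list[str]
    evidence: dict
    decided_at: str


def evaluate(actor: Actor, context: dict, rules: list[Rule]) -> Decision:
    """Apply every rule at the moment of action and keep the evidence."""
    failed = [r.name for r in rules if not r.check(actor, context)]
    return Decision(
        allowed=not failed,
        failed_rules=failed,
        evidence={"actor": actor.actor_id, "role": actor.role, "context": dict(context)},
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


# Illustrative rules only; the roles and the limit are assumptions.
RULES = [
    Rule("role_may_approve", "Only reviewers or agents may approve",
         lambda a, c: a.role in {"reviewer", "agent"}),
    Rule("amount_within_limit", "Agents may not approve amounts above 1000",
         lambda a, c: a.role != "agent" or c["amount"] <= 1000),
]

decision = evaluate(Actor("agent-7", "agent"), {"amount": 2500}, RULES)
print(decision.allowed, decision.failed_rules)  # False ['amount_within_limit']
```

Because the decision record names the failed rule and preserves the actor and context, the same object can serve both the audit trail and any later learning loop.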

The executive shift is practical. Budgets move from scattered use cases to an intelligence platform. Decision rights move from ad hoc committees to named owners of data products, policy, and assurance. Metrics move from model accuracy in isolation to time from event to decision, rate of governed component reuse, and first-touch exception resolution.
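The three metrics named above can be computed from an ordinary decision log. The sketch below is one possible reading of them, assuming a hypothetical log format (`event_at`, `decided_at`, `components`, `exception`, `touches`) and a hypothetical set of governed shared components; real definitions would need to be agreed with the metric owners.

```python
from datetime import datetime
from statistics import median

# Hypothetical decision log; field names and values are illustrative only.
DECISIONS = [
    {"event_at": "2025-01-06T09:00:00", "decided_at": "2025-01-06T09:04:00",
     "components": ["retrieval", "policy_engine"], "exception": False, "touches": 0},
    {"event_at": "2025-01-06T10:00:00", "decided_at": "2025-01-06T10:30:00",
     "components": ["retrieval", "custom_script"], "exception": True, "touches": 1},
    {"event_at": "2025-01-06T11:00:00", "decided_at": "2025-01-06T11:10:00",
     "components": ["summarization", "policy_engine"], "exception": True, "touches": 3},
    {"event_at": "2025-01-06T12:00:00", "decided_at": "2025-01-06T12:06:00",
     "components": ["custom_script"], "exception": False, "touches": 0},
]

# Assumed catalog of governed, shared platform services.
SHARED_COMPONENTS = {"retrieval", "summarization", "policy_engine"}


def minutes_to_decision(record: dict) -> float:
    """Elapsed minutes between the triggering event and the decision."""
    start = datetime.fromisoformat(record["event_at"])
    end = datetime.fromisoformat(record["decided_at"])
    return (end - start).total_seconds() / 60


def median_time_to_decision(records: list[dict]) -> float:
    return median(minutes_to_decision(r) for r in records)


def governed_reuse_rate(records: list[dict]) -> float:
    """Share of component invocations served by governed shared components."""
    used = [c for r in records for c in r["components"]]
    return sum(c in SHARED_COMPONENTS for c in used) / len(used)


def first_touch_resolution_rate(records: list[dict]) -> float:
    """Share of exceptions resolved on the first human touch."""
    exceptions = [r for r in records if r["exception"]]
    return sum(r["touches"] == 1 for r in exceptions) / len(exceptions)


time_to_decision = median_time_to_decision(DECISIONS)
reuse_rate = governed_reuse_rate(DECISIONS)
first_touch = first_touch_resolution_rate(DECISIONS)
print(time_to_decision, round(reuse_rate, 2), first_touch)  # 8.0 0.71 0.5
```

The median is used here rather than the mean so that a few slow outlier cases do not mask the typical experience; that choice is an assumption, not something the cited research prescribes.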

In health plans, that looks like authorizations, claims, and correspondence drawing on the same governed evidence, the same policy engine, and the same memory. In other industries, it is the same physiology with different labels. The organizational metabolism improves when these capabilities move together: governed data, shared reasoning services, policy in the workflow, and memory with provenance.

What the leaders do differently

McKinsey, BCG, Deloitte, and the best operator stories point to the same pattern. High performers are not deploying more tools. They are rewiring how work runs by:

Redesigning workflows around AI. McKinsey finds high performers are roughly three times more likely to rebuild processes rather than bolt AI onto legacy steps.

Assigning visible executive ownership. Nearly half have senior leaders accountable for AI outcomes. That rate is roughly three times higher than in the rest of the market.

Embedding human-in-the-loop as policy. Human validation is not an exception path. It is a standard checkpoint that regulators and boards expect, and it is one of the clearest differentiators of top performers.

Investing in platform and data first. High performers direct more than 20% of digital budgets to AI and data platforms, roughly double the allocation of others. Deloitte reports leaders allocating about a third of digital budgets to AI and the underlying data and platform services that make reuse possible.

Measuring adaptability, not just activity. Speed to decision, reuse of governed components, and first-touch exception resolution become everyday metrics. McKinsey’s high performers pair efficiency with growth and innovation objectives, and as a result report materially higher EBIT impact than peers.

The payoff is visible. BCG’s 2025 analysis shows future-built leaders achieving about 1.7× revenue growth, 1.6× EBIT margins, and 3.6× three-year TSR relative to peers. That is what compounding looks like in practice.

Figure 2. A small elite captures outsized value: “future-built” leaders deliver about 1.7× revenue growth, 1.6× EBIT margin, and 3.6× three-year TSR versus others. Source: BCG, The Widening AI Value Gap (Oct 2025), Exhibit 1.

Architecture for a healthier metabolism

To scale intelligence, enterprises need two layers that work together: a fabric that moves context and a governing grid that makes trust enforceable.

The cognitive layer behaves like a fabric. It carries context, memory, and data between systems and teams in real time. Reusable data products replace one-off pipelines. Event streams keep decisions current. Retrieval and summarization are shared services rather than bespoke scripts hidden in teams. Provenance and lineage travel with every artifact so that explanations are routine, not heroic.

The governance layer acts like an operating grid. It sets the enterprise rules of engagement. Identity and authorization define who and what may act. Policy sits inside the workflow rather than on paper. Human review points are explicit and measured. Audit logs and explanations are generated by default. Orchestration balances cost, speed, and risk so that agents do not optimize one dimension at the expense of the others.

When these layers are present, the enterprise learns without drifting and scales without losing the ability to explain itself to regulators, customers, clinicians, auditors, and boards.

Industry lenses: healthcare and beyond

Healthcare shows the stakes clearly because complexity and scrutiny are both high. Consider prior authorization. In a traditional model, a request bounces between intake, clinical review, benefits, and correspondence, with context lost at each handoff. In an adaptive model, an intake service classifies and extracts information instantly. Retrieval pulls longitudinal history, coverage rules, and current guidelines. Review applies policy and confidence thresholds that route ambiguous cases to clinicians. Communication is generated in plain language for providers and members, with links to the evidence that supported the decision. Every step writes to the same governed record. Outcomes feed learning loops automatically. Turnaround falls. Consistency rises. Clinicians move to higher-value judgment. Most importantly, each automated step is explainable.

The same physiology applies in other sectors. In manufacturing, a fabric plus grid lets design, supply, and quality operate on a common, live context instead of trading stale files. In financial services, credit, risk, and compliance work against shared rules that produce explanations in line with each decision. In the public sector, integrated casework compresses the time between citizen input and agency action, while creating an auditable story of why things happened.

Architecting for scale: governance, safety, and trust

Scaling AI is as much a governance problem as it is an engineering one. Trust must be designed as a system property. That means provenance on every output, human review where risk is highest, and clear accountability when software acts on behalf of people. Without this spine, pilots grow in number but not in impact.

Senior teams should treat governance as an operating discipline with explicit design choices:

Risk-tiered workflows. Classify decisions by consequence. Automate low-risk, low-ambiguity cases. Route low-confidence or high-impact cases for human review. Log both paths with equal rigor.

Policy binding. Enforce rules at runtime and keep a human-readable explanation alongside machine traces to simplify audits.

Assurance lifecycle. Version models, agents, and prompts. Use approval gates, shadow tests, rollback plans, and deprecation schedules to reduce change risk.

Role clarity. Name owners for data products, policy, model stewardship, and decision calibration so outcomes have accountable owners.

Evidence and audit. Preserve inputs, context, rationale, and result to answer “what happened” and “why” without a special project.
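The risk-tiered workflow and the evidence requirement above can be sketched in a few lines. This is a hedged illustration, not a prescribed implementation: the `Case` fields, the two impact tiers, and the 0.90 confidence floor are assumptions chosen for the example. Note that both the automated and the human-review path write to the same audit log.

```python
from dataclasses import dataclass, asdict
from enum import Enum


class Route(str, Enum):
    AUTO = "auto"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Case:
    case_id: str
    impact: str        # "low" or "high" (hypothetical consequence tier)
    confidence: float  # model confidence in [0, 1]


CONFIDENCE_FLOOR = 0.90  # assumed threshold; would be calibrated per workflow

AUDIT_LOG: list[dict] = []


def route(case: Case) -> Route:
    """Pick a path by consequence and confidence, and log either path equally."""
    if case.impact == "high":
        chosen, reason = Route.HUMAN_REVIEW, "high-impact decision"
    elif case.confidence < CONFIDENCE_FLOOR:
        chosen, reason = Route.HUMAN_REVIEW, "confidence below floor"
    else:
        chosen, reason = Route.AUTO, "low impact and high confidence"
    # Preserve inputs, result, and rationale so "what" and "why" are answerable.
    AUDIT_LOG.append({**asdict(case), "route": chosen.value, "reason": reason})
    return chosen


routes = [
    route(Case("c1", "low", 0.97)),   # routine and confident
    route(Case("c2", "low", 0.62)),   # routine but ambiguous
    route(Case("c3", "high", 0.99)),  # confident but consequential
]
print([r.value for r in routes])  # ['auto', 'human_review', 'human_review']
```

Checking impact before confidence means a highly confident model still cannot bypass review on consequential decisions, which is one way to keep review effort concentrated where it changes outcomes.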

When this spine is present, human-in-the-loop becomes a growth lever rather than a brake. Review effort concentrates where it changes outcomes. Exceptions resolve faster because context is intact. Leadership gets forward visibility into risk, not just backward reports. In regulated domains like healthcare, that is what allows intelligence to scale without eroding trust.

Why human-in-the-loop is now a growth lever

Over the past year, governance boards became commonplace and regulators emphasized human validation for higher-risk decisions. McKinsey’s data shows that reliable human review is a top marker of high performers. In production environments, inaccuracy shows up as the most frequent risk, and explainability gaps are common. This is why explicit human validation and full provenance need to be designed into the flow rather than added later.

The conclusion is practical. Human judgment should regulate the system the way autonomic controls stabilize a body under stress. The goal is speed with stability. The enterprises that understand this are moving faster because their leaders trust the system to self-correct.

The new executive playbook

Re-engineer the flow of intelligence. Map where decisions stall or lose context. Replace static reports with event-driven services and shared memory. Prioritize platform services like retrieval, summarization, validation, and correspondence that multiple teams can reuse, and track reuse rate as a leading indicator.

Redefine roles for human-AI symbiosis. Create model stewards, policy architects, and decision calibrators who bridge domain and technical depth. Invest in literacy programs that align values and escalation paths—not just tools training.

Redesign the operating model for continuous learning. Shorten release cycles. Treat every workflow as a living experiment. Track adaptability metrics alongside unit cost and throughput.

Fund the platform, not just the pilot. The budget exists. Consolidate scattered proofs into shared foundations that compound. Name an owner at the executive level for the operating grid and hold the line on standards.

Anchor on outcomes that exceed efficiency. Pair efficiency with growth and innovation goals. The high performers in McKinsey’s survey that do this report materially higher EBIT impact than peers.

Why timing matters

The past 45 days confirmed that the agent wave is not theoretical. Salesforce disclosed thousands of live agent customers with accelerating creation rates. Snowflake showed that when agents live next to governed data, deployment velocity jumps. MIT’s estimate that 95% of pilots fail to accelerate revenue shows how often programs stall, and BCG’s finding that only 5% of companies lead in value capture shows how wide the value gap has become. Deloitte’s latest read shows budgets shifting into platforms.

Executive search firms report that demand for Chief AI Officers and AI transformation leaders has tripled in 2025. The labor market is reorganizing around the work of absorption, not just adoption. The difference maker now is architectural readiness: governed data products, shared reasoning services, policy in the workflow, and a live memory.

Economics of connection

McKinsey estimates enormous global value from AI over the decade, yet implies that less than half will materialize without enterprise-grade integration. We have seen this movie before.

In the early internet era, value accrued to firms that mastered the protocols and operating models that connected everything else. The same will be true here. Systems of record that remain isolated will plateau. Systems of intelligence that connect data, policy, and action will compound. The next advantages accrue to enterprises that metabolize intelligence faster than their environment changes.

A closing view

If 2023 was discovery and 2024 was pilots, 2025 became wiring. The years ahead belong to the adaptive enterprise. It treats intelligence as a company-wide utility. It expects every workflow to learn. It measures trust and transparency as rigorously as cost and speed. It accepts that autonomy without accountability is not scale, and that accountability without speed is not progress.

Autonomy was never the destination. Adaptation is. When insight moves as quickly as action and when actions feed learning in plain view, AI stops being an add-on and starts becoming the enterprise itself.

For health plans and health systems, the path is clear. Put prior authorization, appeals, and correspondence on the same governed evidence and policy engine. Keep clinicians in the loop where judgment matters. Track reuse and time to decision as first-order metrics. As this circulatory system strengthens, access improves, friction falls, and trust returns. That is how transformation becomes visible—to members, clinicians, and regulators alike.

Not more pilots. A healthier metabolism.

This article was posted by Rajeev Ronanki on his Substack on November 13, 2025.

Rajeev Ronanki

Rajeev Ronanki continues to reimagine the future of healthcare by harnessing the power of AI and data to provide consumers with predictive, proactive, and personalized insights at the intersection of healthcare supply and demand. His experience spans over 25 years of innovation-driven industry and social change across healthcare and technology, and he regularly speaks on topics related to navigating the future of healthcare, harnessing data-driven insights, and delivering personalized experiences. In November 2021, Rajeev released “You and AI: A Citizen’s Guide to AI, Blockchain, and Puzzling Together the Future of Healthcare,” which has become an Amazon Best Seller.

When he served as the President of Carelon Digital Platforms, Rajeev led efforts to transform Elevance Health into a digital platform for health and wellbeing. He and his leadership team collaborated with internal and external partners to expand virtual care, create a longitudinal patient record to improve care and reduce overall administrative burden, deploy AI to increase auto-adjudication of claims and expedite manual claims review processes, modernize the provider data lifecycle into a single source of truth, and pilot innovative solutions to transform the way consumers interact within the healthcare ecosystem.

Before Elevance Health, Rajeev was a partner at Deloitte Consulting, LLP, where he established and led Deloitte’s life sciences and healthcare advanced analytics, artificial intelligence, and innovation practices. Additionally, he was instrumental in shaping Deloitte’s blockchain and cryptocurrency solutions and authored pieces on various exponential technology topics such as artificial intelligence, blockchain, and precision medicine.

Rajeev obtained a bachelor’s degree in mechanical engineering from Osmania University in India and a master’s degree in computer science from the University of Pennsylvania.