Sometimes the Veneer of Intelligence is Not Enough

By Doug Lenat

We all chafe against the brittleness of Siri, Alexa, Google… every day. They’re so incredibly useful and yet so incredibly… well, stupid, at the same time. For instance:

Me to Google: How tall was the President of the United States when Donald Trump was born?

Google to me: 37 million hits, none of which (okay, I didn’t really read through all of them) tell me the answer. Almost all of them tell me that the Donald was born on June 14, 1946.

Me to Google: How tall was the President of the United States on June 14, 1946?

Google to me: only 6 million hits this time, but again it doesn’t appear that any contain the answer.

Me to Google: Who was the President of the United States on June 14, 1946?

Google to me: 1.4 million hits, and this time most of them are about Donald Trump, but at least some of them contain sentences revealing that the President then was Harry S. Truman.

Me to Google: How tall was Harry S. Truman?

Google to me: Now the hits (and info box) tell me the answer millions of times over: 5’ 6”.

Of course, using Google and other search engines day after day, decade after decade, has by now trained me never to ask that original question in the first place: How tall was the President of the United States when Donald Trump was born? I know better; I’d put together a little plan and ask a sequence of queries much like the one I ended up asking.
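The plan I ended up executing by hand is really a composition of lookups. Here is a minimal Python sketch of that composition, assuming two tiny hand-built fact tables; the names `BIRTH_DATES`, `PRESIDENTIAL_TERMS`, `born_on`, and `president_on` are hypothetical, made up for illustration, and are not any search engine’s API. It chains two of the steps; a third lookup, from president to height, would complete the original question.

```python
from datetime import date

# Hypothetical, hand-built fact tables standing in for "look-up" sources.
BIRTH_DATES = {
    "Donald Trump": date(1946, 6, 14),
}

# (president, start of term, end of term); only two entries, for illustration.
PRESIDENTIAL_TERMS = [
    ("Franklin D. Roosevelt", date(1933, 3, 4), date(1945, 4, 12)),
    ("Harry S. Truman", date(1945, 4, 12), date(1953, 1, 20)),
]


def born_on(person: str) -> date:
    """Step 1: a direct fact lookup."""
    return BIRTH_DATES[person]


def president_on(day: date) -> str:
    """Step 2: find whose term contains the day (start inclusive, end exclusive)."""
    for name, start, end in PRESIDENTIAL_TERMS:
        if start <= day < end:
            return name
    raise LookupError(f"no president on record for {day}")


def president_when_born(person: str) -> str:
    """Compose the two lookups, as the sequence of searches above did by hand."""
    return president_on(born_on(person))


print(president_when_born("Donald Trump"))  # Harry S. Truman
```

The tables are beside the point; what matters is the composition. Once each step is a function over known facts, the compound question becomes a single call instead of a plan the user has to work out.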

And yet that sort of logical inference is one of the hallmarks of human cognition. We know a lot of things, but so do dogs and search engines. What separates us is that we can also deduce things, at least if they require only a couple of reasoning steps; we do that so quickly that we aren’t even aware of doing it, and we expect everyone else to be able to do it too. Witness every time you use a pronoun, an ambiguous word, an analogy or metaphor, or an ellipsis.

Fred was mad at Sam because he stole his lunch.

Who stole whose lunch? Your brain decodes that into the image of Sam stealing Fred’s lunch. And it doesn’t stop there; from that one sentence you can answer a ton of additional questions, like: Did Sam know he was going to steal it before he did it (yes, probably, at least a little before)? Did Fred immediately know his lunch had been stolen (no, probably not until he went to fetch it from the refrigerator)? Why did Sam do it (he might have been hungry, or wanted revenge on Fred for some wrong Fred committed, or maybe Sam just mistook one bag for another and the criminal intent is all in Fred’s mind)? Are Fred and Sam about the same age (probably; otherwise it would have been worth mentioning)? Were Fred and Sam in the same building on the day this happened? And your brain goes even further, abducing a whole rich panoply of additional, even less certain (but still quite likely) things: Where was the lunch (in a bag in a refrigerator)?

Some linguists will tell you, and themselves, that this assignment of pronouns comes from some grammatical rule, like “Sam” being the proper noun closest to “he”. To them I offer this alternative sentence I could have written:

Fred was mad at Sam so he stole his lunch.

I’ve changed just one word, “because” to “so”, but now Fred is clearly the thief. And notice that in neither case did you for an instant think that Fred stole Fred’s lunch or Sam stole Sam’s lunch. In fact, I could have given you this pair of sentences back to back:

Fred was mad at Sam because he stole his lunch. So he stole his lunch.

Now Sam is the initial thief, and then Fred steals Sam’s lunch to get back at him (and/or just so he won’t go without lunch that day, if it’s all happening on the same day).
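To make the contrast with the grammar-only explanation concrete, here is a toy Python sketch, assuming just two hypothetical hand-written rules keyed on the connective; it is not how Cyc or any real parser resolves pronouns. A baseline that binds “he” to the closest name picks Sam as the thief either way, while the knowledge-based rules flip the answer when “because” becomes “so”.

```python
from typing import NamedTuple


class Reading(NamedTuple):
    thief: str
    victim: str


def nearest_noun_heuristic(angry: str, target: str) -> Reading:
    """Grammar-only baseline: 'he' binds to the closest name, 'his' to the other."""
    return Reading(thief=target, victim=angry)


def resolve_theft(angry: str, target: str, connective: str) -> Reading:
    """Toy resolver for '<angry> was mad at <target> <connective> he stole his lunch.'

    Hypothetical common-sense rules:
      - 'because' introduces the cause of the anger; being stolen from makes
        the victim mad at the thief, so the target of the anger is the thief.
      - 'so' introduces a consequence; an angry person may retaliate against
        the one they are mad at, so the angry person is the thief.
    Either way the thief and the victim differ: nobody steals their own lunch.
    """
    if connective == "because":
        return Reading(thief=target, victim=angry)
    if connective == "so":
        return Reading(thief=angry, victim=target)
    raise ValueError(f"no rule for connective {connective!r}")


print(resolve_theft("Fred", "Sam", "because"))  # Reading(thief='Sam', victim='Fred')
print(resolve_theft("Fred", "Sam", "so"))       # Reading(thief='Fred', victim='Sam')
print(nearest_noun_heuristic("Fred", "Sam"))    # Reading(thief='Sam', victim='Fred')
```

The baseline happens to agree with the “because” reading and gets the “so” reading wrong, which is exactly the point of the one-word change.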

All this is akin to what Dan Kahneman calls “thinking slow”, though, as we’ve seen here, short reasoning chains can happen so quickly that we aren’t even consciously aware of going through them. I mentioned ellipsis above; sometimes that is an explicit “etc.”, but sometimes it’s just another sentence that presumes you inferred certain things, or will infer them once you read that next sentence. That’s part of what happened in the sentence pair above, but this third sentence is an even clearer example:

Fred was mad at Sam because he stole his lunch. So he stole his lunch. Their teacher just sighed.

Wait, what teacher? Presumably Sam and Fred are students, and all this drama happened in school, and they are in the same class, and they brought their lunches to school, and they’ve done things like this before,… and so on. Those three dots contain multitudes, but even each innocuous-looking sentence break, above, contains multitudes.

This is a large part of the reason why unrestricted natural language understanding is so difficult to program. No matter how good your elegant theory of syntax and semantics is, there’s always this annoying residue of pragmatics, which ends up being the lower 99% of the iceberg. You can wish it weren’t so, and ignore it, which is easy to do because it’s out of sight (it’s not explicitly there in the letters, words, and sentences on the page; it’s lurking in the empty spaces around them). But lacking it, to any noticeable degree, gets a person labeled autistic. They may be otherwise quite smart and charming (such as Raymond in Rain Man and Chauncey Gardiner in Being There), but it would be frankly dangerous to let them drive your car, mind your baby, cook your meals, act as your physician, manage your money, etc. And yet those are the very applications the world is blithely handing over to severely autistic AI programs!

I’ll have some more to say about those worries in future columns, but my focus will be on constructive things that can be done, and are being done, to break the AI brittleness bottleneck once and for all.

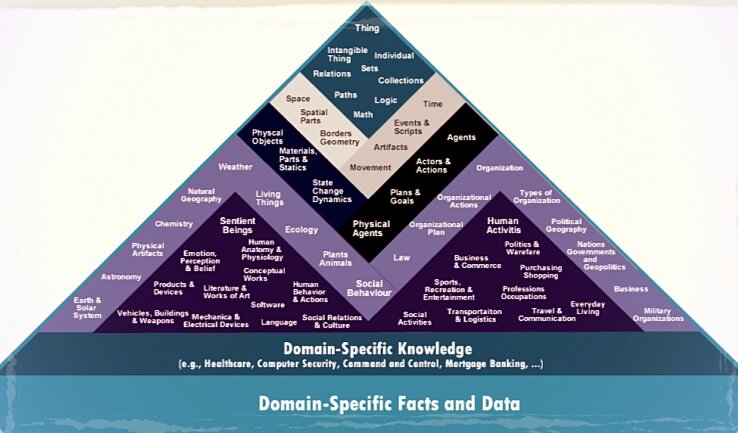

Knowing a lot of facts is at best a limited substitute for understanding. Google is proud that its search bar knows 70 billion things, and it should be proud of that. By contrast, the Cyc system knows only 15 million things, and relatively few of them are what one would call specific facts; they’re more general pieces of common-sense knowledge like “If you own something, you own all its parts”, “If you find out that someone stole something of yours, you’re likely to be mad at them”, and “If someone wants something to be true, then they’re more likely to act in ways that bring that state of affairs about”. Google’s 70 billion facts can answer 70 billion questions, but Cyc’s 15 million rules and assertions can answer trillions of trillions of trillions of queries, just as you and I can, because it can reason a few steps deep with and about what it knows. Cyc’s reasoning “bottoms out” in needing specific facts, such as the birth date of Donald Trump, but fortunately those are exactly the sorts of things it can “look up”, just as you or I would.
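To see how a few general rules answer questions nobody stored explicitly, here is a minimal Python sketch. It assumes facts are simple (predicate, subject, object) triples and rules are plain functions, and it forward-chains two of the rules quoted above until nothing new appears. This naive forward chaining is a swapped-in simplification for illustration; Cyc’s own representation language and inference engine are far more expressive, and every name here (`owns_parts`, `mad_at_thief`, `lunchbag1`, and so on) is hypothetical.

```python
# Facts are (predicate, subject, object) triples; all names are hypothetical.
Fact = tuple[str, str, str]

facts: set[Fact] = {
    ("owns", "Fred", "lunchbag1"),
    ("partOf", "sandwich1", "lunchbag1"),
    ("partOf", "apple1", "lunchbag1"),
    ("stole", "Sam", "sandwich1"),
}


def owns_parts(kb: set[Fact]) -> set[Fact]:
    """If X owns Y and P is part of Y, then X owns P."""
    return {("owns", x, p)
            for (r1, x, y) in kb if r1 == "owns"
            for (r2, p, whole) in kb if r2 == "partOf" and whole == y}


def mad_at_thief(kb: set[Fact]) -> set[Fact]:
    """If Y stole something X owns (X != Y), X is likely mad at Y.

    Simplified: ignores whether X has actually found out about the theft yet.
    """
    return {("likelyMadAt", owner, thief)
            for (r1, thief, item) in kb if r1 == "stole"
            for (r2, owner, owned) in kb
            if r2 == "owns" and owned == item and owner != thief}


RULES = [owns_parts, mad_at_thief]


def forward_chain(kb: set[Fact]) -> set[Fact]:
    """Apply every rule repeatedly until a pass derives no new facts."""
    kb = set(kb)
    while True:
        new = set().union(*(rule(kb) for rule in RULES)) - kb
        if not new:
            return kb
        kb |= new


derived = forward_chain(facts)
print(("likelyMadAt", "Fred", "Sam") in derived)  # True
```

Neither “Fred owns the sandwich” nor “Fred is likely mad at Sam” was ever stated; the first takes one step and the second takes two.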

There are several important elements of this approach to Cognitive Computing that I haven’t talked about here, and plan to cover in future columns:

The importance of having a formal representation for knowledge, so that inference can be automated. While it’s vastly more convenient to just leave things in natural language, natural language is vastly more difficult for programs to understand. There are, of course, some things that programs can do without really understanding, like recognizing patterns and making recommendations, and AI today is a wonderland of exactly such applications.

The importance of the formal representation language being expressive enough to capture all the sorts of things people say to each other, the things one might find in an ad or a novel or a news report. Drastically less expressive, simpler formal languages (such as RDF and OWL) focus on what can readily be done efficiently, and then may try to add on some tricks to recoup a little of the lost expressivity, but that’s a bit like trying to get to the moon by building taller and taller towers. By contrast, what I’ve found necessary is to force oneself to use a fully expressive language (higher-order logic) and then add on tricks to recoup the lost efficiency.

The general mechanism, and the specific steps, by which a system like the one I’ve been talking about can be “told about” various online data repositories and services, so that it knows how to access them when it’s appropriate and necessary to do so. For instance, it needs to understand, just as you and I do, what sorts of queries Google is and is not likely to be able to answer.

Most significant of all, for Cognitive Computing in the coming decade, is how this sort of “left brain” deduction, induction, and abduction can collaborate and synergize with the sort of “right brain” thinking fast that characterizes all the rest of AI today.

To give just a sketch of what I mean by this synergy, I’ll close with an example from a project we did a few years ago for the National Library of Medicine (part of the NIH). Medical researchers were initially very optimistic about genome-wide association studies, which build up databases of correlations between patients’ DNA mutations and the particular diseases or conditions they suffer from. But they lamented the high “noise” level in those data, because such studies couldn’t separate correlation from causation. In some A↔Z correlation cases, Cyc was able to reason its way to several plausible alternative causal pathways, each containing intermediate hypotheses that could be tested independently and statistically by going back to the patient data. It’s that back-and-forth reasoning, from big data to causal reasoning and back to big data again, that is the main path forward I see for achieving true Cognitive Computing. Neither hemisphere of our brain suffices on its own, and neither of the two AI paradigms suffices on its own.
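The pathway-suggestion step can be caricatured as path enumeration over a graph of known causal links. This Python sketch assumes a small hypothetical graph with placeholder node names; it is not the system built for the NLM, and the statistical testing against patient data is not shown. Each consecutive pair of nodes on a path is a separate link that could be checked on its own.

```python
# Hypothetical causal links (cause -> possible effects); node names are placeholders.
CAUSAL_LINKS: dict[str, list[str]] = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "Z"],
    "D": ["Z"],
}


def causal_paths(graph: dict[str, list[str]], start: str, goal: str, path=None):
    """Yield every simple (cycle-free) path from start to goal by depth-first search."""
    path = (path or []) + [start]
    if start == goal:
        yield path
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # never revisit a node already on this path
            yield from causal_paths(graph, nxt, goal, path)


for p in causal_paths(CAUSAL_LINKS, "A", "Z"):
    # Each consecutive pair is one link, i.e. one independently testable hypothesis.
    hypotheses = list(zip(p, p[1:]))
    print(" -> ".join(p), hypotheses)
```

Three candidate pathways come out of this graph (A -> B -> D -> Z, A -> C -> D -> Z, and A -> C -> Z), and a link shared by several of them, such as D -> Z, would be a natural first thing to test.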

This article was originally published in COGNITIVE WORLD in 2017.